Bridging Disciplines and Making New Connections to Understand Science Learning

April 5, 2021

Keywords: discipline-based education research, interdisciplinary, learning analytics, simulation

Target readers: general researchers

Author: Melanie Peffer

Melanie has a B.S. and Ph.D. in molecular biology from the University of Pittsburgh and completed postdoctoral training in learning sciences at Georgia State University. She is affiliated with the University of Colorado Boulder as a researcher in the Institute of Cognitive Science and teaches introductory biology as part of the Health Professionals Residential Academic Program. Dr. Peffer is the author of the best-selling trade book, Biology Everywhere: How the science of life matters to everyday life. When not writing, Melanie enjoys playing her flute and piccolo and spending time in Colorado’s great outdoors with her husband and son.

Photo: Pittsburgh, PA, a city connected by bridges, by Benjamin Rascoe (@dapperprofessional)

What do biology, learning sciences and learning analytics have in common?

Well, in my case – they are the pillars of my research program. And combining the three together provides new insights into how people learn and understand science. But I’ll get there in a minute – for now, how did I end up with a research program that sits comfortably in three disciplines?

I earned my PhD in molecular biology and realized partway through my graduate program that I was far more interested in how people learned biology.

During the latter half of graduate school, I volunteered in the education department at the Pittsburgh Zoo and PPG Aquarium. As I worked with middle school and high school students on the weekends and on my dissertation research on the weekdays, I pondered how to translate what I did all week in a laboratory into the classroom.

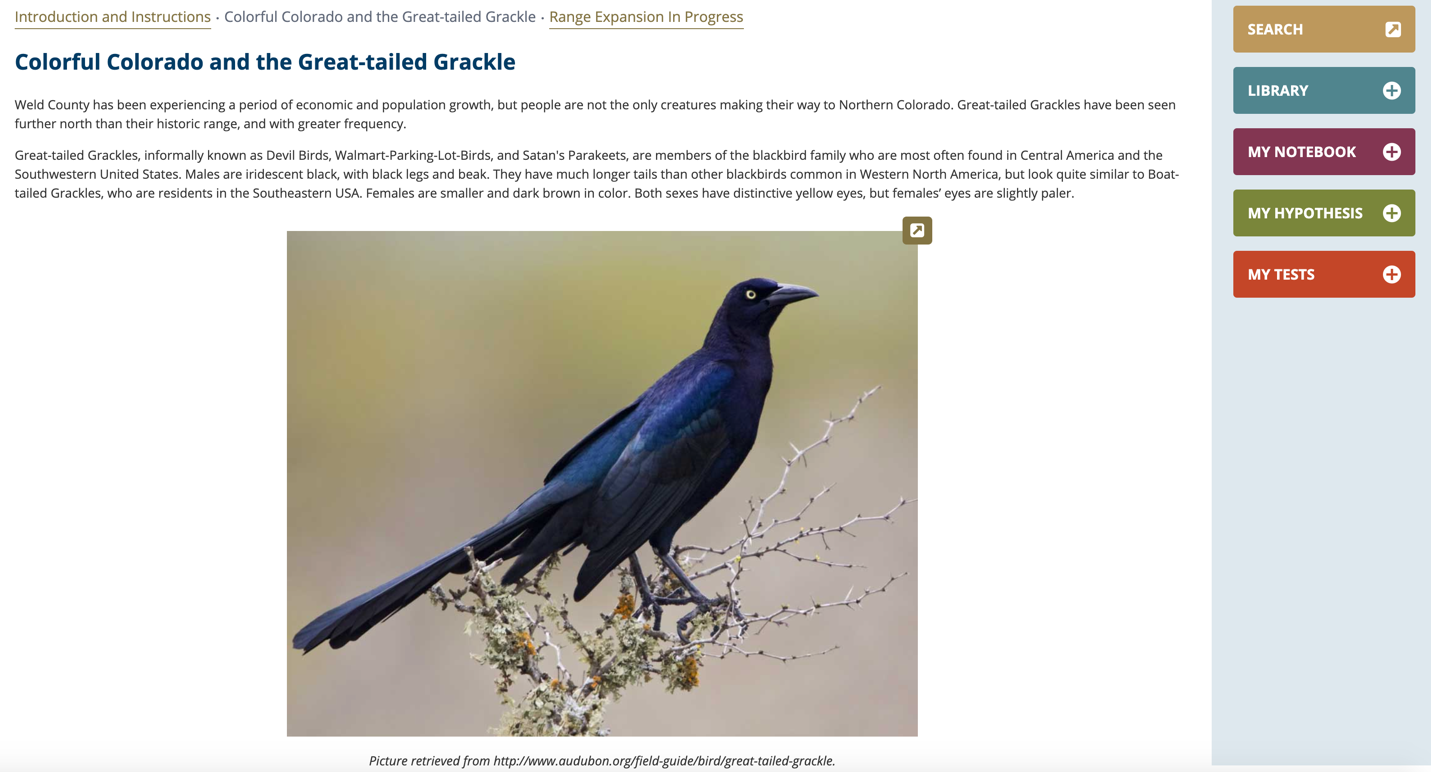

Said otherwise: how do we give students authentic science experiences? This question led to the genesis of the Science Classroom Inquiry (SCI) simulations. SCI simulations give students an authentic science inquiry experience as a web app. Students are positioned as scientists tasked with solving a real-world, unstructured problem. As with real-world problems, there isn’t a single answer waiting at the end. The authenticity comes from having multiple ways to complete the simulation, the ability to fail and start over, and the lack of a final answer.

Figure 1. Screenshot from Invasion of the Grackles SCI Simulation

I wanted to measure epistemological beliefs about science, or beliefs that individuals have about the nature of science knowledge and how that knowledge is created through inquiry. Understanding beliefs individuals have about science knowledge is important for knowing how to teach science as well as cultivating a scientifically literate society.

Beliefs are difficult to measure. Sometimes our beliefs aren’t fully realized. Or, when given a metric, an individual will respond with the perceived “right” answer, rather than what they actually believe.

Prior theoretical work suggested that looking at what students do in an authentic science practice (like that provided by SCI), was a better measure of what they believed. During my postdoctoral appointment, I struggled to figure out exactly how to achieve this. How could I use practices in SCI to understand what people believed about the nature of science knowledge?

I first heard about learning analytics at the Computer Supported Collaborative Learning conference in Gothenburg, Sweden in 2015. It was an aha moment for sure. I realized that analytics techniques like natural language processing and clustering could be used to understand epistemological beliefs about science.

Here I summarize some of my prior work at the intersection of biology, learning sciences, and learning analytics. I also provide some perspectives on the rise of discipline-based education research (DBER) and how learning analytics can be leveraged to understand how learning works in science classrooms, and ultimately provide guidance on teaching in science classrooms.

Language as proxy for belief

As part of the SCI simulation, students are frequently asked to give written responses. These include the rationale for their hypotheses, their interpretations of data discovered during inquiry, and their final conclusions.

How we talk about science matters when understanding beliefs about the nature of science knowledge. Some advocate banishing words like “prove,” “correct,” and “right/wrong” because they convey the wrong idea about the nature of science knowledge. Science knowledge changes over time in light of new evidence, and thinking that we have “correct” answers can conflict with this idea.

Given the importance of language, we examined the language that novices (meaning they had no experience with authentic science inquiry) and experts (meaning they had previously published a first-author peer-reviewed article) used when making their conclusions in SCI. To do so, we used a natural language processing tool called TAALES, followed by a keyness-like analysis to pick out commonly used verbs.

Our most important finding was that experts used more cautious, tentative language when making their conclusions. Experts commonly included phrases like “this may explain” or “supports my idea.” In contrast, novices rarely included tentative language. This suggests that using tentative language may indicate a belief that science knowledge changes in light of new evidence. It also demonstrates how analytics tools like TAALES can be leveraged for understanding one’s beliefs.
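To make the idea of counting tentative language concrete, here is a minimal sketch in Python. It is not TAALES and not the keyness-like analysis we ran; it is a much simpler stand-in that counts whole-word matches against two short word lists. The word lists, the function names, and both example conclusions are invented for illustration and are not data from the study.

```python
import re

# Hypothetical markers of tentative ("hedging") language; not from the study.
HEDGES = ("may", "might", "could", "suggests", "supports", "appears", "possibly")
# Hypothetical markers of certainty; not from the study.
CERTAIN = ("prove", "proves", "proved", "correct", "right", "wrong", "definitely")


def count_markers(text, markers):
    """Count whole-word, case-insensitive occurrences of any marker in text."""
    words = re.findall(r"[a-z']+", text.lower())
    return sum(1 for word in words if word in markers)


def tentativeness(text):
    """Return (hedge_count, certainty_count) for one written conclusion."""
    return count_markers(text, HEDGES), count_markers(text, CERTAIN)


# Invented example conclusions, for illustration only.
expert_like = "This may explain the decline, and the data supports my idea."
novice_like = "The test proves my hypothesis was correct."

print(tentativeness(expert_like))
print(tentativeness(novice_like))
```

A word-list count like this ignores context (for example, “right” as a direction), which is one reason a purpose-built tool and a corpus comparison are a better fit for real analysis.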

Machine learning to compare inquiry practices

One of the benefits of the SCI simulation platform is that it permits autonomy. Users can choose as many or as few tests as they like based on their hypothesis – and pursue any testing strategy they like. In early SCI pilot studies, we noticed that students had very different ways of completing the simulation. Later work demonstrated that experts differed from novices – and that what they did was predicted by how well they performed on a traditional assessment of their epistemological beliefs about science.

We could see these differences qualitatively – but how could we analyze them quantitatively? Collaborating with a colleague in computer science, we used machine learning, specifically k-means clustering, to group students’ inquiry patterns. When we compared the practices of non-science majors, advanced biology majors, and biology experts, we observed three clusters: inquiry patterns characterized by high testing, by high information seeking, and by overall low activity. Combining these results with other data streams in the study, we noted that the differences in investigative strategy were not explained by educational background or motivation to complete the study – but might reflect underlying beliefs about the nature of science knowledge.
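For readers unfamiliar with k-means, the following is a small, self-contained sketch of the basic algorithm in Python. It is not the code or the feature set from our study. I am assuming, purely for illustration, that each student is summarized by two numbers (tests run, information pages viewed), and all nine data points are invented. The starting centroids are picked by hand so the example is reproducible; in practice a library implementation such as scikit-learn’s KMeans, with randomized initialization, would be the more usual choice.

```python
import math


def kmeans(points, centroids, max_iter=100):
    """Basic Lloyd's algorithm: assign points to the nearest centroid,
    recompute each centroid as the mean of its members, repeat until stable."""
    centroids = [tuple(c) for c in centroids]
    labels = []
    for _ in range(max_iter):
        # Assignment step: index of the closest centroid for every point.
        labels = [
            min(range(len(centroids)), key=lambda k: math.dist(p, centroids[k]))
            for p in points
        ]
        # Update step: mean of the members of each cluster.
        new_centroids = []
        for k in range(len(centroids)):
            members = [p for p, lab in zip(points, labels) if lab == k]
            if members:
                new_centroids.append(
                    tuple(sum(dim) / len(members) for dim in zip(*members))
                )
            else:
                # Keep an empty cluster's centroid where it was.
                new_centroids.append(centroids[k])
        if new_centroids == centroids:
            break
        centroids = new_centroids
    return labels, centroids


# Invented (tests_run, info_pages_viewed) pairs, for illustration only.
points = [
    (9, 2), (8, 3), (10, 2),   # many tests, little information seeking
    (2, 9), (3, 8), (2, 10),   # few tests, much information seeking
    (1, 1), (2, 1), (1, 2),    # little activity overall
]
# Hand-picked starting centroids (an assumption made for reproducibility).
initial = [points[0], points[3], points[6]]

labels, centroids = kmeans(points, initial)
print(labels)
```

With this toy data the three groups are well separated, so the algorithm recovers them after a single update. Real inquiry logs are noisier, and the number of clusters is itself a choice that needs justification.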

Perspectives on DBER and Learning Analytics

Although I love working in disparate disciplines and connecting the pieces to ask new questions about how people learn and understand science, I find myself constantly defining “DBER” (discipline-based education research) and “learning analytics.” I usually give up and call myself a “learning scientist” which represents a third discipline – although one that recognizes multi-disciplinary efforts to understand learning.

Interdisciplinary research, or the intentional combination of different points of view in a way that creates a new product, is a powerful method for developing new theory, methods, and insights into learning. I’m a published molecular biologist – so I understand the methods, practices, and culture within the field. I am also formally trained in learning sciences and have actively participated in the learning analytics community for over four years.

My work combines knowledge about how people learn and how we can use big data to understand learning with my first-hand knowledge of what it means to engage in biology inquiry. This lets us study how other people learn biology, and specifically how beliefs about the nature of science knowledge can be teased out in the context of biological inquiry.

My prior work – as well as the work of others – is demonstrating how learning analytics can yield new insights into how we learn science. This includes insight into measurement of constructs that can’t be directly measured, like beliefs about the nature of science knowledge.

Learning analytics and DBER are both relatively new fields of study. As we work to understand how people learn in the sciences to inform evidence-based teaching – while simultaneously trying to understand how to connect analytics to learning – the potential for synergies between the two fields is exciting.