Applying the Science of Data Analytics to Product Development

October 6, 2021

Keywords: edtech, evaluation, implementation science, learning design, learning sciences, research, social and emotional learning

Target readers: Researchers, practitioners, administrators, students

Author: Rachel Schechter

Dr. Rachel Schechter leads Learning Experience Design, Evaluation, and Consulting services at Charles River Media Group, acting as a Chief Research Officer “for hire” to companies large and small. Rachel helps guide the use of data and evaluation to support product improvements, build evidence of efficacy, and strengthen customer implementations. Rachel has led research teams evaluating multimedia educational programming for learners of all ages since 2007 and most recently was the Vice President of Learning Sciences at Houghton Mifflin Harcourt. Her research often examines motivation, feedback, and learning in blended learning environments, and she has published several research articles evaluating formal and informal educational programming.

What do product improvement, product efficacy, and product implementation all have in common? Using data! Anyone working on an online product needs to monitor who is using what, when, for how long, and how it went. If you are looking for new ways to leverage user data to understand engagement and learning, check out these stories about how I combine evaluation, learning, and implementation sciences to engage users and improve outcomes.

While climbing the ranks in educational technology research over the last 10 years, I’ve seen that improving educational outcomes requires pulling research and methods from a variety of fields. The three I’ve focused on are evaluation sciences, implementation sciences, and learning sciences. Deep expertise in at least one of these realms is helpful, but an EdTech research leader also needs to integrate ideas from complementary fields, including psychology, economics, and marketing.

Although I describe these sciences separately, a single person or team may draw on methods and resources from each at different stages of the development process. Let’s walk through each science: what it is, what it looks like in EdTech, and how to learn more about it.

Evaluation Sciences

I first explored evaluation sciences while working on my dissertation at Tufts University, which examined the use of educational music videos in the preschool show Jim Henson’s Sid the Science Kid (thank you, Mr. Rogers Scholarship).

Research suggests that young children don’t understand song lyrics; for example, they can recite the ABCs without understanding that “LMNOP” represents five different letters. What could children understand about science, though, from the visuals of a 90-second music video? And was that learning significantly different from what they learned by watching a 12-minute episode?

I found that teachers could teach preschoolers simple engineering concepts just as well with the short music video as with the full episode, meaning that kids could learn how a pulley system worked in 88% less time.
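The 88% figure follows directly from the running times above: a 90-second music video versus a 12-minute (720-second) episode. As a quick arithmetic check, here is a tiny Python sketch; the function name is illustrative only:

```python
def percent_time_saved(short_seconds: float, long_seconds: float) -> float:
    """Percentage reduction in viewing time when the short format replaces the long one."""
    return (1 - short_seconds / long_seconds) * 100


music_video = 90      # 90-second music video
episode = 12 * 60     # 12-minute episode, converted to seconds

saved = percent_time_saved(music_video, episode)
print(f"{saved:.1f}% less time")  # 87.5% less time, which rounds to 88%
```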

However, I found that kids who only watched the music video did not pick up on the scientific vocabulary. Why? Because of cognitive overload: they couldn’t process the music, lyrics, and visuals all at the same time.

Children can learn a lot from visuals: rather than using terms like “rope” and “axle,” they described those objects in their own words (“string,” “metal thing,” “round gray thing”). I recommend that teachers (or a character guide) introduce any academic vocabulary before the music video and review it afterward to support understanding and retention.

Learning Sciences

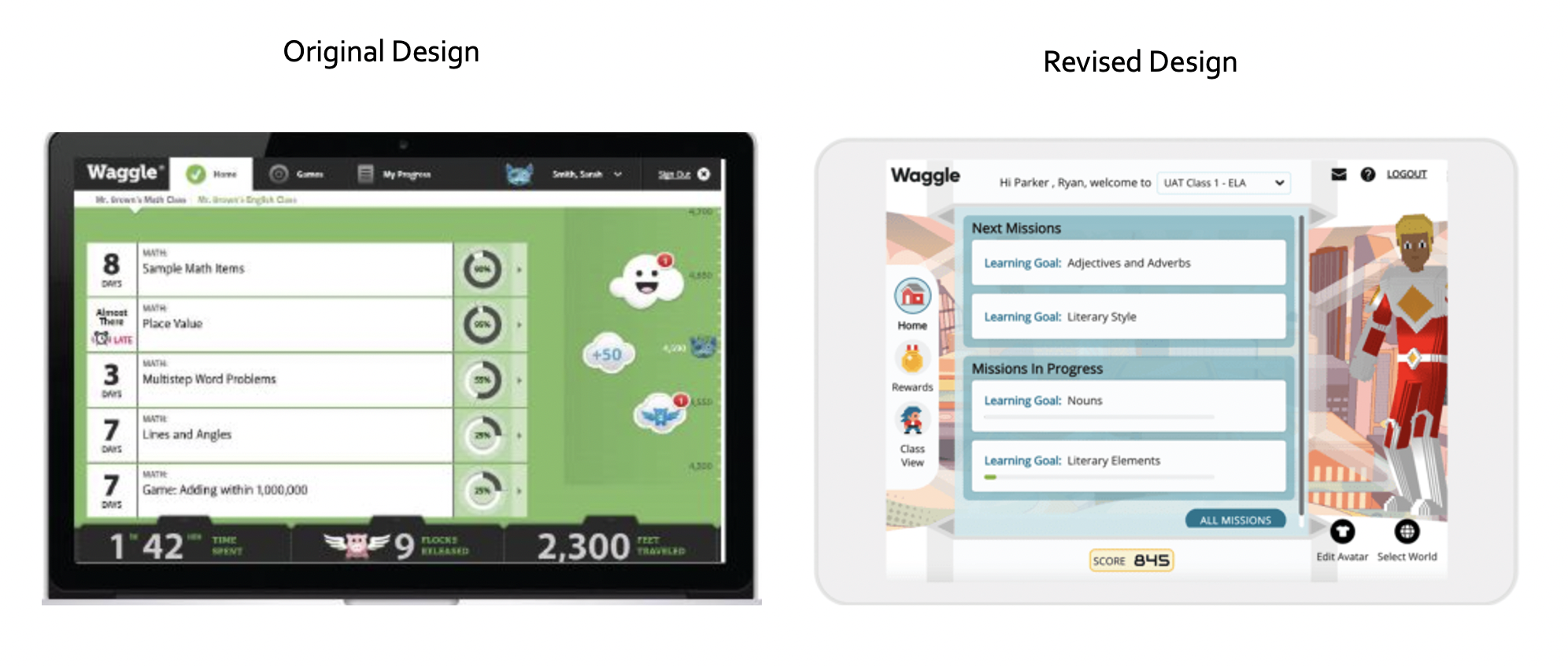

Learning sciences integrates principles of learning across systems and disciplines and focuses on realistic learning environments that use technology. At Houghton Mifflin Harcourt, I guided the product design team for Waggle, an ELA and math practice program, as they reimagined the student user experience using the newly created HMH Learning Sciences Pillars (RAMP).

With student engagement as our goal, we drew on self-determination theory to inform the student dashboard design and better support students’ autonomy, competence, and sense of belonging (relatedness), which together foster intrinsic motivation. The way a product presents data to students can increase or diminish intrinsic motivation, depending on its data visualization and design. For example, in the original design, five rows of numbers and donut graphs felt overwhelming and lacked direction (reducing a student’s sense of autonomy). In the revised design, only two “missions in progress” were shown, and student progress was indicated with a bar graph that more closely represents a journey with an end.

In the original design, five different scores and points were placed on the right side and bottom of the screen, which is a lot for a student to process. To better support students’ sense of competence, the revised design featured a single score at the bottom of the screen and focused on the learning goals for current and future missions instead of a collection of metrics. Probably the biggest change was that students could design a custom avatar, featured at full size on the right side of the screen, which better supported their sense of belonging. I’m thrilled to share that Waggle was a CODiE 2021 finalist for Best Gamification in Learning!

Implementation Sciences

Implementation sciences are the study of methods that support the application of research findings and best practices (the Carnegie Foundation for the Advancement of Teaching has a nice introductory blog post about it). Educational technology research is a great venue for its application: evaluation research identifies best practices, learning sciences helps us design products with those practices in mind, and implementation science completes the picture by figuring out how to increase the uptake of those practices.

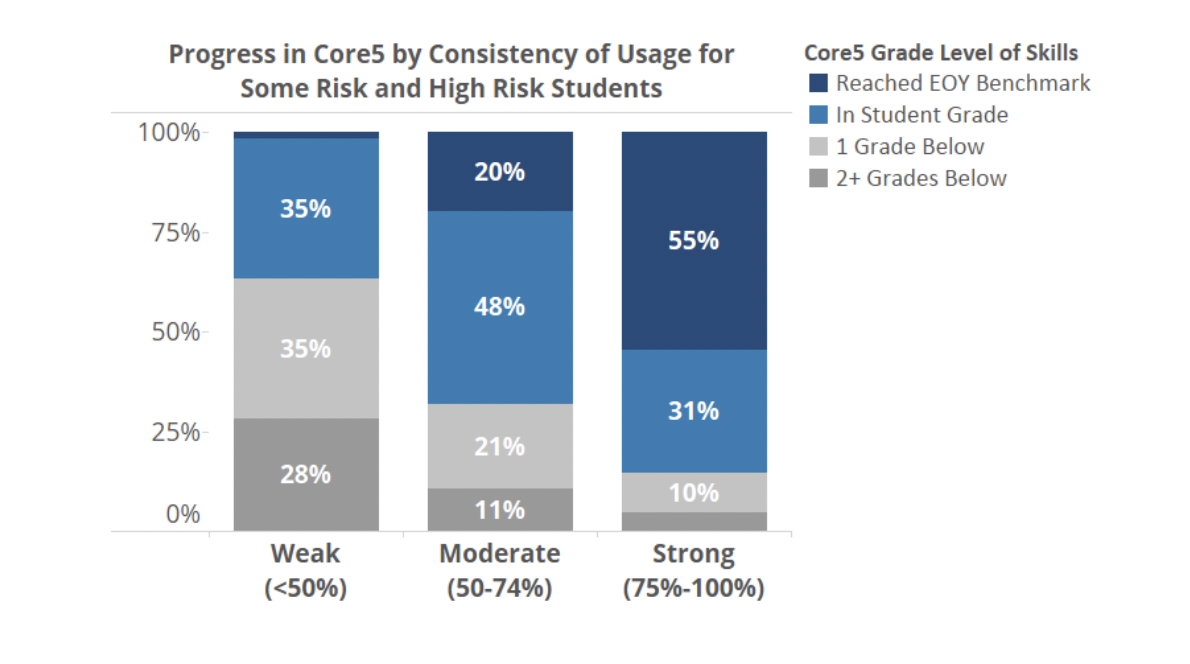

At Lexia Learning, I was tasked with creating individualized usage prescriptions for Lexia Reading Core5, so that students who were farther behind were prescribed more usage time to match the intensity of instruction they needed. While that logic makes perfect sense and is commonly described in Response to Intervention (RtI) and Multi-Tiered Systems of Support (MTSS) models, it was quite radical to have four different usage times in one classroom for the same app!

We saw right away that changing user behavior (for both teachers and students) would not happen on its own. I created a piece called “Every Minute Counts” showing that when students consistently met their recommended minutes, they made much more progress and more often succeeded as a group. It took a few years to make this message clear and powerful, and once we did, the graph became a staple of every launch training!
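A comparison like the one behind “Every Minute Counts” can be sketched in a few lines. This is a minimal illustration, not the analysis Lexia actually ran: it assumes hypothetical per-student records (a prescribed weekly target, the minutes logged each week, and a progress measure such as levels gained) and an arbitrary 80% threshold for “consistently” meeting the target. All names and data below are made up.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class StudentUsage:
    # Hypothetical record layout, for illustration only.
    student_id: str
    recommended_minutes: int   # prescribed weekly minutes (varies by student need)
    weekly_minutes: list[int]  # minutes actually logged each week
    levels_gained: int         # hypothetical progress measure


def met_consistently(s: StudentUsage, share: float = 0.8) -> bool:
    """True if the student met their own target in at least `share` of logged weeks."""
    if not s.weekly_minutes:
        return False
    weeks_met = sum(m >= s.recommended_minutes for m in s.weekly_minutes)
    return weeks_met / len(s.weekly_minutes) >= share


def compare_groups(students: list[StudentUsage], share: float = 0.8) -> dict[str, float]:
    """Mean progress for students who did vs. did not consistently meet their targets."""
    groups: dict[str, list[int]] = {"consistent": [], "inconsistent": []}
    for s in students:
        key = "consistent" if met_consistently(s, share) else "inconsistent"
        groups[key].append(s.levels_gained)
    return {k: mean(v) for k, v in groups.items() if v}


# Made-up example data with different prescriptions in one classroom.
students = [
    StudentUsage("a", 60, [60, 65, 70, 60, 62], 5),
    StudentUsage("b", 90, [90, 95, 100, 92, 90], 6),
    StudentUsage("c", 60, [20, 60, 30, 10, 40], 2),
    StudentUsage("d", 120, [80, 120, 60, 90, 100], 3),
]
print(compare_groups(students))  # {'consistent': 5.5, 'inconsistent': 2.5}
```

Checking each student against their own prescription, rather than a single classroom-wide cutoff, mirrors the idea of individualized usage times described above.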

Context, Context, Context

In addition to all these scientific frameworks, at this moment in EdTech development it is critical to include Social and Emotional Learning (SEL) and Employee Engagement, both of which call for a Diversity, Equity, and Inclusion (DEI) lens. A few thoughts on these:

- SEL considers social and emotional awareness and management, individual variability, and local context for learners and educators. Researchers partner with Product, Content, Professional Learning, Sales, and Marketing to elevate user voice so that decisions best meet the needs of users (learners and educators). While we empathize with our users, we also set boundaries for ourselves to protect our psychological safety and manage our own mental health. (Check out Alicia Quan’s podcast episode featuring Amanda Rosenburg, who tells a resonant story about empathizing with users at the start of the pandemic.)

- To engage employees, EdTech companies need to foster diversity, equity, and inclusion for team members company-wide. Researchers can partner with Human Resources, Learning and Development, and other Support teams to strengthen communication patterns and staff development planning, and to collaborate on collecting data through interviews, focus groups, and surveys.

The work I’ve done over the last 10 years has brought together evaluation, implementation, and learning sciences to create engaging and effective learning tools. Organizations need to break down silos and form collaborations that keep information flowing through their research teams, so that data, analytics, and insights from different sources inform decisions.

The US EdTech market is approaching a spending peak that will not be sustained once pandemic relief funds expire. More than ever before, school leaders will be looking for evidence before buying. The methods, information, and tools are available: much of the information I’ve shared today is free, and tools are more affordable and easier to use than they were even a decade ago. EdTech researchers can help schools build effective toolkits that meet educators’ and students’ evolving needs. It’s an exciting time to be in EdTech research!

Summaries Explaining Evaluation, Learning, and Implementation Sciences

Evaluation Sciences uses and builds evidence to learn what works and what doesn’t, for whom, and under what circumstances. Evaluation can happen anywhere that data can be collected, stored, and compared. Product evaluation works best when data collection systems can inform iterative and continual product development. When implementing design changes, include an analysis plan for how you will measure the impact of those changes. The American Evaluation Association provides several great frameworks. To get started, also see evaluation scholar Michael Quinn Patton and data viz guru Stephanie Evergreen.
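An analysis plan can be as simple as naming the metric and the comparison before a design change ships. As a minimal sketch, assuming a hypothetical per-student metric (missions completed in a week) collected from two independent cohorts, one on the original design and one on the revised design, the comparison below reports the difference in means and Welch’s t statistic, which does not assume the two groups have equal variances. The metric and numbers are invented for illustration.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(before: list[float], after: list[float]) -> tuple[float, float]:
    """Return (difference in means, Welch's t statistic) for `after` minus `before`.

    Assumes two independent groups, each with at least two observations.
    """
    diff = mean(after) - mean(before)
    # Standard error built from each group's own sample variance (Welch's formulation).
    se = sqrt(variance(after) / len(after) + variance(before) / len(before))
    return diff, diff / se


# Hypothetical metric: missions completed per student in one week.
original_design = [3, 4, 2, 5, 3, 4]
revised_design = [5, 6, 4, 6, 5, 7]

diff, t = welch_t(original_design, revised_design)
print(f"difference in means: {diff:.2f}, Welch t: {t:.2f}")
```

For a p-value, `scipy.stats.ttest_ind(revised_design, original_design, equal_var=False)` computes the same statistic. If the same students are measured before and after the change, a paired comparison is the better fit.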

Learning Sciences explores concepts related to understanding the learning process, pulling together cognitive psychology, neuroscience, educational psychology, and other disciplines. Learning Scientists inform data architecture, advise on reporting for educators and administrators, establish and review skill progressions and mastery models, and inform data analytics plans for product improvement. Digital Promise has excellent resources about Learning Sciences for educators, while the Learning Scientists (learningscientists.org) is more academically focused.

Implementation Sciences facilitates the uptake of evidence-based practices in how users apply products. Implementation studies are often conducted between product sprints to help inform prioritization and understand what may or may not be working with product design, training, or support. While looking at a whole user population is fast, organizing users into subgroups using personas or other profiles as a guide can help you find inspirational stories that drive implementation improvements. EdSurge has put together a personalized learning toolkit that walks through the inclusion of implementation science. The Learning Accelerator has a helpful article about measuring implementation quality and progress toward stronger implementations.
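Looking at subgroups can be as simple as grouping usage records by a persona label and comparing a few summary metrics side by side. As a minimal sketch, assume each record already carries a hypothetical persona label, weekly usage minutes, and a met-goal flag; none of these fields come from a specific product, and the data is invented.

```python
from collections import defaultdict
from statistics import median


def summarize_by_persona(users: list[dict]) -> dict[str, dict[str, float]]:
    """Group user records by persona and summarize each subgroup."""
    groups: dict[str, list[dict]] = defaultdict(list)
    for user in users:
        groups[user["persona"]].append(user)

    summary = {}
    for persona, members in groups.items():
        summary[persona] = {
            "n": len(members),
            # Median is less sensitive than the mean to a few very heavy users.
            "median_weekly_minutes": median([m["weekly_minutes"] for m in members]),
            # Booleans sum as 0/1, so this is the share of members who met their goal.
            "share_met_goal": sum(m["met_goal"] for m in members) / len(members),
        }
    return summary


# Hypothetical records; persona labels and fields are illustrative only.
users = [
    {"persona": "new teacher", "weekly_minutes": 40, "met_goal": False},
    {"persona": "new teacher", "weekly_minutes": 55, "met_goal": True},
    {"persona": "veteran teacher", "weekly_minutes": 80, "met_goal": True},
    {"persona": "veteran teacher", "weekly_minutes": 90, "met_goal": True},
    {"persona": "veteran teacher", "weekly_minutes": 30, "met_goal": False},
]

for persona, stats in summarize_by_persona(users).items():
    print(persona, stats)
```

Subgroups whose numbers stand out, in either direction, are natural places to look for the stories that can guide implementation improvements.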