SoLAR Webinars

About SoLAR Webinar Series

The Society for Learning Analytics Research is proud to present a new webinar series which will promote high-quality research and practice in Learning Analytics. Webinars are 30-45 min online presentations on some of the key issues and trends in the Learning Analytics field followed by live Q&A with the audience.

To get notified about upcoming webinars, subscribe to our newsletter. All webinars run on the Zoom platform, and recordings of all webinars are available on our YouTube channel.

Upcoming Webinar

While learning analytics dashboards (LADs) are the most common form of LA intervention, there is limited synthesis of the evidence regarding their impact on students’ learning outcomes. In this webinar, Rogers Kaliisa (University of Oslo) and Mohammed Saqr (University of Eastern Finland) will present their LAK24 Best Paper, which synthesizes the findings of all studies that investigated the impact of LADs on students' learning outcomes, participation, motivation, and attitudes. The presenters will discuss in detail the current status of evidence and whether it supports the notion that LADs are effective or can improve students' learning. The presenters will also discuss the future implications of their work and what it means for future research on LADs and learning analytics at large.

Mohammed Saqr is an Associate Professor of Computer Science at the School of Computing, University of Eastern Finland. He leads UEF’s Learning Analytics Unit, which is one of Europe's most active learning analytics laboratories. Saqr has published extensively on learning analytics, network analysis and artificial intelligence, and has authored two books on learning analytics methods. His work has received international recognition, including SoLAR Europe emerging scholar in 2023 and several best paper awards (e.g., at LAK, ICCE, SITE, and TEEM), and has received funding from the research councils of Finland and Sweden. He is on the editorial boards of several journals (e.g., BJET, TLT and PLOS One) and has chaired several conference tracks and proceedings. He is currently working on advancing the transition network analysis method and on issues related to complexity and individual differences. You can learn more about Saqr's work here.

Rogers Kaliisa is a Postdoctoral Researcher at the Department of Education, University of Oslo. His research focuses on learning analytics, mobile learning, and computer-supported collaborative learning. Kaliisa is an active member of the international research community, serving on several society boards, including the Society for Learning Analytics Research (SoLAR), where he has contributed for four years as a Student Representative and Member at Large. He is also a co-founder of the African Network for Learning Analytics (ANLAR), a special interest group within SoLAR dedicated to advancing learning analytics research and practice across Africa. Additionally, he serves on the editorial board of the Journal of Learning Analytics. His work has gained international recognition, earning him the Best Full Paper Award at LAK24 in Kyoto, Japan. Currently, his research explores how multimodal analytics can support and improve group collaboration and learning dynamics in professional higher education settings.

Past Webinar Recordings

Webinar 26: Have Dashboards Lived Up to the Hype?

While learning analytics dashboards (LADs) are the most common form of LA intervention, there is limited synthesis of the evidence regarding their impact on students’ learning outcomes. In this webinar, Rogers Kaliisa (University of Oslo) and Mohammed Saqr (University of Eastern Finland) will present their LAK24 Best Paper, which synthesizes the findings of all studies that investigated the impact of LADs on students' learning outcomes, participation, motivation, and attitudes. The presenters will discuss in detail the current status of evidence and whether it supports the notion that LADs are effective or can improve students' learning. The presenters will also discuss the future implications of their work and what it means for future research on LADs and learning analytics at large.

Webinar 25: Addressing the Digital Divide to Support Learning Analytics Adoption in the Global South

In recent years, Learning Analytics (LA) has emerged as a powerful tool for enhancing educational practices, improving student outcomes, and informing policy decisions. However, the persistent digital divide often restricts the adoption of LA technologies, particularly in regions of the Global South. In his talk, Rafael Ferreira Mello explores the critical barriers that limit the widespread implementation of LA, including issues related to infrastructure, access to technology, and digital literacy. Focusing on practical solutions, he discusses how addressing these challenges can foster more equitable access to data-driven insights and improve educational outcomes in under-resourced contexts. Drawing on case studies from the Brazilian Ministry of Education, this presentation will offer strategies for overcoming these barriers and reaching students in low-resource environments.

Webinar 24: Dos and Don'ts for having your paper accepted at LAK

LAK25 PC Chairs Jelena Jovanovic, Alejandra Martinez-Mones, Caitlin Mills and Xavier Ochoa, together with Grace Lynch and Nicole Hoover from the Organizing Committee, shared the ins and outs of the LAK process. The PC chairs discussed the Dos and Don'ts of the submission process, as well as how to write a good paper that has a chance of being accepted at the conference.

Webinar 23: Learning and Regulating with ChatGPT: What an Experimental Study Tells Us

The advances in artificial intelligence (AI) have profoundly transformed and will continue to influence the workforce by automating numerous tasks across various sectors. Consequently, it is vital for students and professionals to develop the capability to “learn and work with AI,” a focus that has increasingly become central in educational paradigms. As the practice and research of AI-assisted learning evolve, a significant advancement in learning analytics is the capacity to measure and understand how learning occurs with AI scaffolding. Nevertheless, empirical research in this area remains nascent, calling for further exploration.

In this webinar, Dr. Fan will present his recently concluded SoLAR Early Career Research Grant 2023 project, which centers on understanding learners' interactions and regulation using ChatGPT. He and his colleagues conducted an experimental study involving 117 learners, who were randomly assigned to one of four groups, each provided with different forms of learning support (e.g., ChatGPT and human experts). His presentation will share insights into how these groups compare in terms of self-regulated learning processes, help-seeking behaviors, self-assessment skills, and overall learning performance. Additionally, Dr. Fan will discuss the promises and challenges of using generative AI in education that were identified in his empirical study.

Webinar 22: Reframing thinking about and modeling learning through complex dynamical systems

Learning is a highly individual process of change that emerges from multiple interacting components (e.g., cognitive, social) that occur at varying levels (e.g., individual, group) and timescales (e.g., micro, meso, macro) in constantly changing environments. Due to its complexity, the theoretical assumptions that describe learning are difficult to computationally model, and many existing methodologies are limited by conventional statistics that do not adhere to these assumptions. In recent years, the learning analytics community has explored the potential of complex dynamical systems for modeling and analyzing learning processes. The complex dynamical systems (CDS) approach refers to theoretical views, largely from physics and biology, that preserve the complexity of learning and could be potentially useful in studying socio-/ technical-/ material-/ symbolic systems that learn.

The integration of CDS into analytical and methodological tenets of LA research is ongoing. Yet, CDS concepts and accompanying methods remain mostly “under the radar” of a larger learning analytics community. In this webinar, Elizabeth Cloude (Tampere University), Jelena Jovanovic (University of Belgrade) and Oleksandra Poquet (Technical University of Munich) will introduce the main theoretical underpinnings and methodological toolkit of Complex Dynamical Systems (CDS), and highlight their relevance to and potential integration with learning analytics. They will present key opportunities for thinking about and modeling learning through CDS concepts and briefly review CDS-inspired research from works-in-progress presented at the latest workshop on CDS in learning analytics.

Webinar 21: Modeling Learning in the Age of ChatGPT

ChatGPT is the new (and most well-known) AI tool that can whip up an essay, a poem, a bit of advertising copy—and a steady boil of hype and worry about what this will mean for education in the future. This talk looks at what ChatGPT and AI models are really doing, what that means for the future of education — and how we can model, study, and assess learning in the world that ChatGPT is helping us create. Join us for a Feature Webinar as faculty director David Williamson Shaffer discusses implications and potential use for harnessing ChatGPT.

Webinar 20: Unveiling the Power of Affect during Learning

In the realm of education, affect has long been acknowledged as a significant factor that impacts learning. Represented by cognitive structures in the mind, affect is described as a mood, feeling, or emotion, which transmits information about the world we experience and compels us to act and make decisions. Research finds that an inability (or ability) to regulate affect (e.g., confusion or frustration) can greatly impact how an individual learns with educational technologies (e.g., intelligent tutoring systems, game-based learning environments, MOOCs). Yet, there are significant theoretical, methodological, and analytical challenges impeding our understanding of how to best identify (and intervene) if and when affect becomes detrimental during learning with educational technologies.

In the "Unveiling the Power of Affect during Learning" webinar, Elizabeth Cloude will discuss state-of-the-art research findings, theoretical, methodological, and analytical approaches, and their challenges. Next, an interdisciplinary perspective that merges an affect framework with complex adaptive systems theory will be presented and two illustrative cases will be demonstrated. Finally, new research tools and directions will be considered for examining how the design of educational technologies can positively influence affect, engagement, knowledge acquisition, and learning outcomes. Opportunities and challenges regarding affect learning analytics will be discussed.

Webinar 19: Socio-spatial Learning Analytics for Embodied Collaborative Learning

Embodied collaborative learning (ECL) provides unique opportunities for students to practice key procedural and collaboration skills in co-located, physical learning spaces where they need to interact with others and utilise physical and digital resources to achieve a shared goal. Unpacking the socio-spatial aspects of ECL is essential for developing tools that can support students' collaboration and teachers' orchestration in increasingly complex, hybrid learning spaces. Advancements in multimodal learning analytics and wearable technologies are motivating emerging analytic approaches to tackle this challenge.

In this SoLAR webinar, Lixiang Yan will introduce a conceptual and methodological framework of socio-spatial learning analytics that maps socio-spatial traces captured through wearable sensors to meaningful educational insights. The framework consists of five primary phases: foundations, feature engineering, analytic approaches, learning analytics, and educational insights. Two illustrative cases will be presented to demonstrate how the framework can support educational research and the formative assessment of students' learning. Finally, the opportunities and challenges regarding socio-spatial learning analytics are discussed.

Webinar 18: Can theories of extended cognition inspire new approaches to writing analytics?

Much of writing analytics has been based on assumptions that words represent concepts held by the writer, and that identification of concepts can tell us something about the writer's learning. The heart of this idea is that the brain makes an internal model that represents the external world, and that language allows expression of that internal representation such that others can share understanding. Thus, as the learner learns, their conceptual model develops, and we assess the extent of that development through the learner's communication. In the case of writing analytics, that communication is the learner's linguistic expression. This theory has been highly successful and has allowed significant advancement in the analysis of learner linguistic expression.

However, much of the success of this internalist representational approach is found in learning contexts that are tightly scoped with a relatively high degree of predictability in learner behaviour. As a result, many analytics technologies are dependent on these limitations. For example, the vast majority of natural language processing technologies that underpin writing analytics require the writer to hold a reasonable command of a common language such as English. This technology typically performs poorly with poor expression (such as inappropriate grammar or frequent mis-spelling), and often very poorly with non-pervasive languages (such as those from small non-western nations). Apart from the obvious equity issues with marginalising learners who do not have a reasonable command of a dominant language, this representational limitation can result in a disconnect between the subsequent analytics and the reality of the learner. This disconnect is exacerbated when the representations are limited to concepts that are assumed to be representations of an external world. In particular, this is a significant issue in situations that are highly contextual, such as when a learner engages in a process of personal reflection. While arguably critical to learning, quality reflection can be ill-defined, general in scope, emotionally laden, and involve multiple layers of conceptual complexity that are difficult to reconcile with specific linguistic features. Apart from simple recounts of events, reflection tends to extend well beyond the expression of mental representations of an external world, and thus demands a re-think of the assumptions fundamental to its analysis.

In this SoLAR webinar, Dr. Andrew Gibson uses his research in reflective writing analytics as an example of how engaging with non-representational theories of extended cognition might allow writing analytics to overcome some of its current limitations. He introduces some of the key features of 4e cognition theories, and highlights their potential benefits for writing analytics. Such theories demand new approaches to modelling, so Andrew shows how he is using principles derived from complex adaptive systems to align with 4e cognition in order to model reflexivity in learners.

Webinar 17: Unpacking Privacy in Learning Analytics for Improved Learning at Scale

Addressing student privacy is critical for large-scale learning analytics implementation. Defining and understanding what privacy means as well as understanding the nature of students’ privacy concerns are two critical steps to developing effective privacy-enhancing practices in learning analytics. This presentation will focus on the findings from two studies. First, we will present our preliminary results on how privacy has been defined by LA scholars, and how those definitions relate to the general literature on privacy. Second, a validated model that explores the nature of students’ privacy concerns (SPICE) in learning analytics in the setting of higher education will be presented. The SPICE model considers privacy concerns as a central construct between two antecedents (perceived privacy risk and perceived privacy control) and two outcomes (trusting beliefs and non-self-disclosure behaviours). Partial Least Squares results show that the model accounts for high variance in privacy concerns, trusting beliefs, and non-self-disclosure behaviours. They also demonstrate that students’ perceived privacy risk is a firm predictor of their privacy concerns. Further, a set of relevant implications for LA designers will be offered.

In this SoLAR webinar, Dr. Olga Viberg presents future research directions, with a focus on a value-based approach to privacy in learning analytics.

Webinar 16: Online and automated exam proctoring: the arguments and the evidence

The emergence of online exam proctoring (aka remote invigilation) in higher education may be seen as a function of multiple interacting drivers, including:

- the rise of online learning

- emergency exam measures required by the pandemic

- cloud computing and the increasing availability of data for training machine learning classifiers

- university assessment regimes

- rising concerns around student cheating

- accountability pressures from accrediting bodies

Commercial proctoring services claiming to automate the detection of potential cheating are among the most complicated forms of AI deployed at scale in higher education, requiring various combinations of image, video and keystroke analysis, depending on the services. Moreover, due to the pandemic, they were introduced in great haste in many institutions in order to permit students to graduate, with far less time for informed deliberation than would have been expected. Consequently, there was significant controversy around this form of automation, with protests at some universities seeing withdrawal of the services, and research beginning to clarify the ethical issues, and produce new empirical evidence.

However, numerous institutions are satisfied that the services they procured met the emergency need, and are continuing with them, which would make this one of the ‘new normal’ legacies of the pandemic. Critics ask, however, whether this should become ‘business as usual’. Regardless of one’s views, the rapid introduction of such complex automation merits ongoing critical reflection.

SoLAR was delighted to host this panel, which brought together expertise from multiple quarters to explore a range of questions, arguments, and what the evidence is telling us, such as...

- This is just exams and invigilation in new clothes, right? They’re not perfect, but universities aren’t about to drop them anytime soon, so let’s all get on with it…

- Are there quite distinct approaches to the delivery of such services that we can now articulate, to help people understand the choices they need to make?

- What ethical issues do we now recognise that were perhaps poorly understood 2 years ago, or that we simply couldn’t afford to engage with in the emergency, but which we must address now?

- What evidence is there about the effectiveness of remote proctoring — automated, or human-powered — at reducing rates of cheating?

- What answers are there to the question, “Should we trust the AI?” Are we now over (yet another) AI hype curve, and ready for a reality check on what “human-AI teaming” looks like for online proctoring to function sustainably and ethically?

- What (new?) alternatives to exams are there for universities to deliver trustworthy verification of student ability, and what are the tradeoffs?

- Who might be better or worse off as a result of the introduction of proctoring?

This panel brought rich experience on the frontline of practice, business and academia:

Phillip Dawson is a Professor and the Associate Director of the Centre for Research in Assessment and Digital Learning, Deakin University. Phill researches assessment in higher education, focusing on feedback and cheating, predominantly in digital learning contexts. His 2021 book “Defending Assessment Security in a Digital World” explores how cheating is changing and what educators can do about it.

Jarrod Morgan is an inspiring entrepreneur, award-winning business leader, keynote speaker, and chief strategist for the world’s leading online testing company. Jarrod founded ProctorU in 2008, and in 2020 led the company through its merger and evolution into Meazure Learning. In his role as chief strategy officer, he is a frequent speaker for the Online Learning Consortium (OLC), the Association of Test Publishers (ATP), Educause, and many others. He has appeared on PBS and the Today Show, has been covered by the Wall Street Journal and The New York Times, and is a columnist with Fast Company through their Executive Board program.

Jeannie Paterson is Professor of Law and Co-Director of the Centre for AI and Digital Ethics, University of Melbourne. She teaches and researches in the fields of consumer protection law, consumer credit and banking law, and AI and the law. Jeannie’s research covers three interrelated themes: the relationship between moral norms, ethical standards and law; protection for consumers experiencing vulnerability; and regulatory design for emerging technologies that are fair, safe, reliable and accountable. She recently co-authored “Good Proctor or ‘Big Brother’? Ethics of Online Exam Supervision Technologies”.

Lesley Sefcik is a Senior Lecturer and Academic Integrity Advisor at Curtin University. She provides university-wide teaching, advice, and academic research within the field of academic integrity. She is a Homeward Bound Fellow and a Senior Fellow of the Higher Education Academy. Dr. Sefcik’s professional background is situated in Assessment and Quality Learning within the domain of Learning and Teaching. Current projects include the development, implementation and management of remote invigilation for online assessment, and academic integrity related programs for students and staff at Curtin. She co-authored “An examination of student user experience (UX) and perceptions of remote invigilation during online assessment”.

(Chair) Simon Buckingham Shum is Professor of Learning Informatics and Director of the Connected Intelligence Centre, University of Technology Sydney, where his team researches, deploys and evaluates Learning Analytics/AI-enabled ed-tech tools. He has helped to develop Learning Analytics as an academic field for the last decade, and has served two terms as SoLAR Vice-President. His background in ergonomics and human-computer interaction always draws his attention to how the human and technical must be co-designed to work together to create sustainable work practices. He recently coordinated the UTS “EdTech Ethics” Deliberative Democracy Consultation in which online exam proctoring was an example examined by students and staff.

Webinar 15: What happens when students get to choose indicators on customisable dashboards

Learning analytics dashboards (LADs) are essentially feedback tools for learners. However, until recently, learners rarely have had a role in designing LADs, especially in deciding the information they would like to receive through such devices. Although learners might not always know what they need to self-manage their learning, we need to understand what type of feedback information and dashboard features they are likely to engage with and if, when, and how they use dashboards. To explore how LADs can better meet the needs of learners, we have embarked on two sets of studies aiming to understand students' information needs. In the first set of studies, we developed a customisable LAD for Coursera MOOCs on which learners can set goals and choose feedback indicators to monitor. We then analysed the learners' indicator selection behaviour to understand their decisions on the LAD and whether learner goals and self-regulated learning skills influence these decisions. The second line of study is a work in progress where we explore learners' study and feedback practices through interviews and surveys to identify information gaps that LA-generated feedback could cover. These studies highlight the role of both feedback literacy and data literacy on the perceived value students draw from LADs.

In this SoLAR webinar, Dr. Ioana Jivet and Dr. Jacqueline Wong discuss implications for our understanding of learners' use of LADs and their design while also suggesting potential dimensions for adapting such interventions.

Webinar 14: Where Learning Analytics and Artificial Intelligence meet: Hybrid Human-AI Regulation

Hybrid systems combining artificial and human intelligence hold great promise for training human skills. In this keynote I position the concept of Hybrid Human-AI Regulation and conceptualize an example of a Hybrid Human-AI Regulation (HHAIR) system to develop learners’ Self-Regulated Learning (SRL) skills within Adaptive Learning Technologies (ALTs). This example of HHAIR targets young learners (10-14 years) for whom SRL skills are critical in today’s society. Many of these learners use ALTs to learn mathematics and languages every day in school. ALTs optimize learning based on learners’ performance data, but even the most sophisticated ALTs fail to support SRL. In fact, most ALTs take over (offload) control and monitoring from learners. HHAIR, on the other hand, aims to gradually transfer regulation of learning from AI-regulation to self-regulation. Learners will increasingly regulate their own learning, progressing through different degrees of hybrid regulation. In this way HHAIR supports optimized learning and transfer (deep learning) and development of SRL skills for lifelong learning (future learning). This concept is innovative in proposing the first hybrid systems to train human SRL skills with AI. The design of HHAIR aims to contribute to four scientific challenges: i) identify individual learner’s SRL during learning; ii) design degrees of hybrid regulation; iii) confirm effects of HHAIR on deep learning; and iv) validate effects of HHAIR on SRL skills for future learning.

In this SoLAR webinar, Dr. Inge Molenaar outlines how to develop advanced measurement of SRL and algorithms to drive hybrid regulation for developing SRL skills in ALTs. The concept of Hybrid Human-AI Regulation has potential to support the development of SRL, and this HHAIR example specifically outlines a reflection of those principles.

Webinar 13: Learning Analytics for "end-users": from Human-centred Design to Multimodal Data Storytelling

The ultimate aim of most Learning Analytics innovations is to close the human-data loop by providing direct support to “end-users” (e.g., teachers and learners). However, presenting data to non-data-savvy stakeholders is not a trivial problem. Trending topics in Learning Analytics, such as adoption, ethics, privacy, explainability of algorithms, and the intensive promotion of the use of dashboards, emphasise the importance of the human factors and the particularities of educational situations in the successful appropriation of Learning Analytics. Yet, Learning Analytics researchers, designers and practitioners do not need to reinvent methods to deconstruct learner interactions or to create effective user interfaces. The community can more actively consider well-established human-computer interaction (HCI) and human-centred design methods to, for example, give students a voice in the design process of data-intensive tools, craft dashboards based on data visualisation principles, and identify authentic needs before creating the next artificial intelligence (AI) solution to be rolled out to teachers.

In this webinar, Dr Roberto Martínez-Maldonado provides a high-level overview of the rapidly growing interest in Human-Centred Learning Analytics and potential ways in which the R&D in this area can evolve in the coming years. Work in this area is embryonic, with some researchers advocating rapid prototyping with teachers and interviewing students to understand their disciplinary perspectives on data. I will present some examples from our own research in the areas of multimodal learning analytics and data storytelling, emphasising some lessons learnt so far and potential areas of future research.

Webinar 12: Analytics for Learning Design: An overview of existing proposals

In this webinar, Dr. María Jesús Rodríguez-Triana reflects on how TEL researchers have advocated for the alignment between learning design and analytics. This alignment may bring benefits such as the use of learning designs to better understand and interpret analytics in its pedagogical context (e.g., using student information set at design time). Conversely, learning analytics could help improve a pedagogical design, e.g., through detecting deviations from the original plan or identifying aspects that may require revision based on metrics obtained while enacting the design. Despite the clear synergies between both fields, there are few studies exploring the active role that data analytics can play in supporting design for learning. This talk provides an overview of data analytics proposals, illustrating how they can enhance awareness and reflection about the learning design impact, properties and activity.

Webinar 11: Investigating persistent and new challenges of learning analytics in higher education

In this webinar, Prof. Hendrik Drachsler will reflect on the process of applying learning analytics solutions within higher education settings, its implications, and the critical lessons learned in the Trusted Learning Research Program. The talk will focus on the experience of the edutec.science research collective, which consists of researchers from the Netherlands and Germany who contribute to the Trusted Learning Analytics (TLA) research program. The TLA program aims to provide actionable and supportive feedback to students and stands in the tradition of human-centered learning analytics concepts. Thus, the TLA program aims to contribute to unfolding the full potential of each learner. It therefore applies sensor technology to support psychomotor skills, and web technology to support meta-cognitive and collaborative learning skills, with highly informative feedback methods. Prof. Drachsler applies validated measurement instruments from the field of psychometrics and investigates to what extent Learning Analytics interventions can reproduce the findings of these instruments. During this webinar, Prof. Drachsler will discuss the lessons learned from implementing TLA systems. He will touch on TLA prerequisites like ethics, privacy, and data protection, as well as highly informative feedback for psychomotor, collaborative, and meta-cognitive competencies and the ongoing research towards a repository, methods, tools and skills that facilitate the uptake of TLA in Germany and the Netherlands.

Webinar 10: Trust and Utility in Learning Analytics

In this webinar, Professor Sandra Milligan discusses trust and utility in Learning Analytics from the perspective of Measurement Sciences. Ever wondered why your brilliant learning analytics work fails to impact schools and colleges? This presentation brings a perspective on this problem, drawn from the measurement sciences. The measurement sciences provide the tools and mindsets that are used by educators to generate the trust and utility that is required to drive uptake of algorithms, apps and other outputs from learning analytics. Professor Milligan and her team work extensively in the education industry building trusted, useful assessments, apps and tools for assessing hard-to-assess competencies, such as general capabilities, 21st-century skills and professional competence. In this presentation, she identifies a series of 'faulty assumptions' that can be detected from time to time in the design of learning analytics projects. The assumptions relate to how learning, and the assessment of learning, are conceptualised, and how learning is best assessed in practice. She will touch on the ambiguous role of prediction in learning design, and the vital role of alternative hypotheses in ensuring trust and utility when using found data. She will point to some practical standards that can be used when designing projects, or reviewing them.

Webinar 9: Instrumenting Learning Analytics

This SoLAR webinar, led by Christopher Brooks, invites the community to discuss approaches for Instrumenting Learning Analytics. As a field at the intersection of social and data sciences, there is a strong need for quality instrumentation of teaching and learning. Yet much of the work done in the field of Learning Analytics to date has not considered instrumentation directly, and has instead been built upon data that is the byproduct of learner activities, sometimes even pejoratively referred to as “data exhaust”. In this talk I will describe both a need for and an agenda toward exploring learning analytics instrumentation directly, where the creation, use, and improvement of data collection instruments are of central interest. I will discuss methodological, architectural, and pragmatic considerations when it comes to the instrumentation of learning analytics systems and give specific thoughts on the need to understand and improve upon instrumentation choices when making theoretical and methodological decisions.

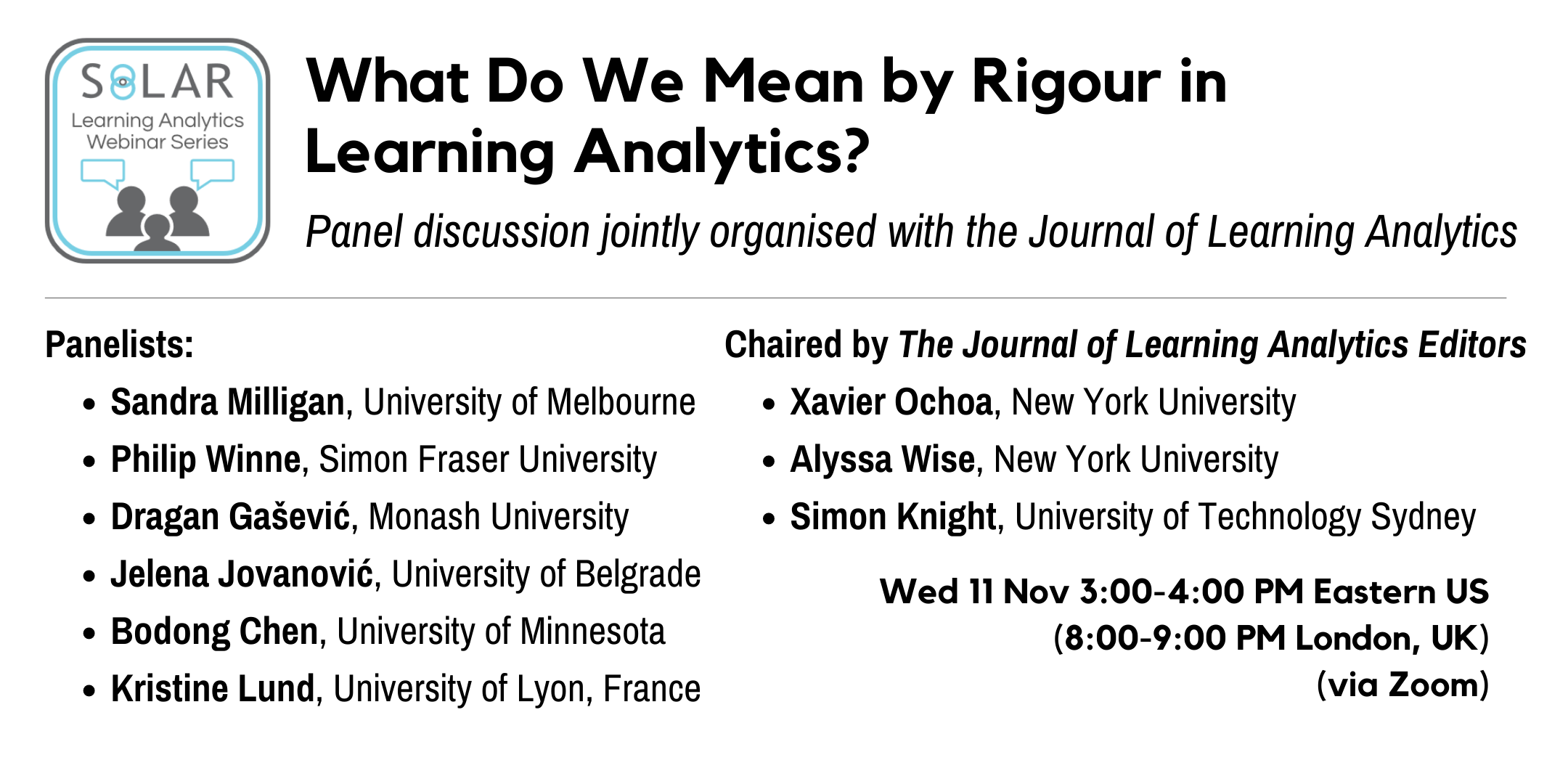

Webinar 8: What Do We Mean by Rigour in Learning Analytics?

This SoLAR webinar, led by Journal of Learning Analytics (JLA) editors Simon Knight, Xavier Ochoa and Alyssa Wise, invites the community to engage with the complex question of what constitutes “rigorous research” in learning analytics (LA). LA is a highly interdisciplinary field drawing on machine-learning techniques and statistical analysis, as well as qualitative approaches, and the papers submitted to and published by JLA are diverse. While this breadth of work and orientations to LA are enormous assets to the field, they also create challenges for defining and applying common standards of rigour across multiple disciplinary norms. Extending the conversation begun in the 2019 editorial of JLA 6(3), this session will examine (a) indicators of quality that are significant in particular research traditions, (b) indicators of quality that are common across them, and (c) indicators of quality that are distinctive to the field of LA as a confluence of research traditions. This session will comprise brief presentations from 6 expert panelists in the field, followed by a lively discussion among them with Q&A. This webinar will lay a foundation for future participatory sessions inviting conversation across the community more broadly.

Webinar 7: Does one size fit all? The experience of implementing an academic counselling system in three Latin American universities

When implementing a new learning analytics solution, we often look at successful experiences at other institutions and tend to expect similar outcomes in our own context. However, correctly identifying the unique aspects of a given institutional context can be a determining factor in the success or failure of implementation. In this webinar, we will present three case studies of learning analytics implementation at three different universities in Latin America. In all three cases, the same academic counselling tool developed in Europe was implemented, and we will examine the common and unique aspects of each institution that influenced the success of the learning analytics adoption. We will also present the lessons learned to serve as guidelines for other institutions working on their own learning analytics tool implementation and adoption.

Webinar 6: Learning analytics adoption in Higher Education: Reviewing six years of experience at Open University UK

Video recording | PDF slides | QA responses

In this webinar, Prof Bart Rienties will reflect on the process of implementing learning analytics solutions within the UK higher education setting, its implications, and the key lessons learned in the process. The talk will specifically focus on the Open University UK (OU) experience of implementing learning analytics to support its 170k students and 5k staff. Its flagship OU Analyse has been hailed as one of the largest applications of predictive learning analytics at scale for the last five years, making the OU one of the leading institutions in the learning analytics domain. The talk will reflect on the strong connections between research and practice, educational theory and learning design, scholarship and professional development, and working in multi-disciplinary teams to explain why the OU is at the forefront of implementing learning analytics at scale. At the same time, not all innovations and interventions have worked. During this webinar, Prof Rienties will discuss the lessons learned from implementing learning analytics systems, how learning analytics has been adopted at the OU and other UK institutions, and what the implications for higher education might be.

Webinar 5: Analyzing Learning and Teaching through the Lens of Networks with Sasha Poquet and Bodong Chen

Networks are a popular metaphor we use to make sense of the world. Networks provide a powerful way to think about a variety of phenomena, from economic and political interdependencies among countries, to interactions between humans in local communities, to protein interactions in drug development. In education, networks give ways to describe human relationships, neural activities in brains, technology-mediated interactions, language development, discourse patterns, etc. The common use of networks to depict these phenomena is unsurprising given the variety of educational theories and approaches that are deeply committed to a networked view of learning. Compatible with this view, network analysis is applied as a method for understanding learning and the connections involved in learning.

This webinar will explore the conceptual, methodological, and practical use of networks in learning analytics by presenting examples from real-world learning and teaching scenarios that cover the following areas. First, in learning analytics, networks are a powerful tool to visually represent connections of all sorts in ways that are straightforward for humans to act upon. Second, network analysis offers a set of metrics that are useful for characterising and assessing various dimensions of learning. Third, the modelling of networks can help to develop explanatory theories about complex learning processes. We will present case studies in each area to demonstrate the utility of networks in learning analytics. By doing so, we argue for a wider conception of learning as a networked phenomenon and call for future learning analytics work in this area.

Webinar 4: Researching socially shared regulation in learning with Sanna Järvelä

Advanced learning technologies have contributed to progress in learning sciences research. There is growing interest in methodological developments using technological tools and multimodal methods (e.g. physiological measures) for understanding learning processes. My research group has been working on collecting multimodal and multichannel data for understanding the complex process of collaborative learning. We have been especially interested in how groups, and individuals in groups, can be supported to engage in, sustain, and productively regulate collaborative processes for better learning. In this presentation, I will introduce the theoretical progress in understanding socially shared regulation in learning (SSRL) and review how we have been collecting, analyzing and triangulating data about regulation in collaborative learning. I will discuss the current methodological challenges and opportunities, and how learning analytics has helped us trace and model SSRL processes.

Webinar 3: Leveraging Writing Analytics for a More Personalized View of Student Performance

Researchers and educators have developed computer-based tools, such as automated writing evaluation (AWE) systems, to increase opportunities for students to produce natural language responses in a variety of contexts and subsequently to alleviate some of the pressures facing writing instructors due to growing class sizes. Although a wealth of research has been conducted to validate the accuracy of the scores provided by these systems, much less attention has been paid to the pedagogical and rhetorical elements of the systems that use these scores. In this webinar, I will provide an overview of case studies wherein writing analytics principles have been applied to educational data. I will then describe multi-methodological approaches to writing analytics that rely on natural language processing techniques to investigate the properties of students’ essays across multiple linguistic dimensions. This approach rests on the notion that the texts students produce vary along multiple linguistic dimensions. Some surface-level features relate to the characteristics of the words and sentences in texts and can alter the style of the essay, as well as influence its readability and perceived sophistication. Further, discourse-level features can be calculated that go beyond the words and sentences. These features reflect higher-level aspects of the writing, such as the degree of narrativity in the essay. Webinar attendees will gain a sense of both the conceptual issues and practical concerns involved in developing and using writing analytics tools for the analysis of multi-dimensional natural language data.

Webinar 2: Designing Learning Analytics for Humans with Humans

Learning analytics (LA) is a technology for enabling better decision-making by teachers, students, and other educational stakeholders by providing them with timely and actionable information about learning-in-process on an ongoing basis. To be effective, LA tools must thus not only be technically robust but also designed to support use by real people. One powerful strategy for achieving this goal is to involve those who will (hopefully!) use the learning analytics in their design. This can be done by observing existing (pre-analytic) teaching and learning practices, gathering information from intended users, or directly engaging them in participatory design. Such attention to people and context contributes to the development of Human-Centered Learning Analytics (see the recent special section in JLA 6(2)).

In this webinar, I'll present a diverse set of examples of the ways that NYU's Learning Analytics Research Network (NYU-LEARN) is including educators and students in the process of building and implementing learning analytics. We'll look at examples of how to: involve students in the creation and revision of learning analytics solutions for their own use; work with instructors to align analytically available metrics with valued course pedagogy; and partner with an educational team to design and implement interventions based on predictions of at-risk students. Webinar attendees will gain a sense of both the conceptual issues and practical concerns involved in designing learning analytics for humans with humans.

Webinar 1: Learning Analytics as Educational Knowledge Infrastructure

The emerging configuration of educational institutions, technologies, scientific practices, ethics policies and companies can be usefully framed as the emergence of a new “knowledge infrastructure” (Paul Edwards). The idea that we may be transitioning into significantly new ways of knowing – about learning and learners, teaching and teachers – is both exciting and daunting, because new knowledge infrastructures redefine roles and redistribute power, raising many important questions. What should we see when we open the black box powering analytics? How do we empower all stakeholders to engage in the design process? Since digital infrastructure fades quickly into the background, how can researchers, educators and learners engage with it mindfully? This isn’t just interesting to ponder academically: your school or university will be buying products that are being designed now. Or perhaps educational institutions should take control, building and sharing their own open source tools? How are universities accelerating the transition from analytics innovation to infrastructure? Speaking from the perspective of leading an institutional innovation centre in learning analytics, I hope that our experiences designing code, competencies and culture for learning analytics shed helpful light on these questions.