Making Learning Analytics “Irresistible”: Part 1

May 21, 2021

Keywords: human-centered design, innovation diffusion, learning analytics, work practices

Target readers: LA Designers, LA Researchers, LA Leaders

Author: Danny Liu

Position: Associate Professor (Education-Focused), The University of Sydney

Danny is a molecular biologist by training, programmer by night, researcher and academic developer by day, and educator at heart. A multiple international and national teaching award winner, he works at the confluence of learning analytics, student engagement, educational technology, and professional development and leadership to enhance the student experience.

Photo by American Heritage Chocolate on Unsplash

For over a decade, learning analytics has captured the imagination of instructors, students, educational administrators, and vendors, promising to help better understand and optimise learning. However, there are few stories of large-scale success.

As learning analytics practitioners and researchers, we must use data to positively impact learners. Practically, we need to demonstrate return on investment to our stakeholders. Politically, governments are calling for more translational research. And frankly, we need to ensure that learning analytics stays relevant and meets the hope and hype of those at the centre of our work.

At the end of the day, learning analytics will be seen by instructors as another tool in their technological toolkit, and by students as another step in their learning journey. We'd love to have instructors tell us,

“the system is so well developed; the navigation is so transparent and self-explanatory and, most importantly, the functionality is so irresistible, and the applicability is so high that the temptation was too strong.”

Far from fiction, these words were expressed by a unit coordinator from the University of Sydney Business School about the learning analytics platform, SRES, in place across the university. So, how can we design learning analytics and its implementation so that instructors can't wait to use it and tell their colleagues about it?

What makes innovations irresistible?

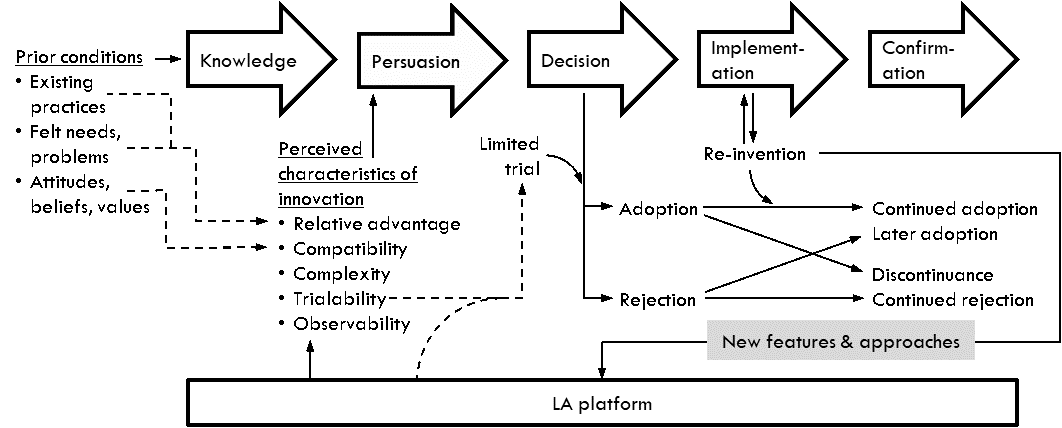

When I first discovered the field of learning analytics around 8 years ago, Leah Macfadyen and Shane Dawson's seminal 2012 paper Numbers are not enough introduced me to Everett Rogers' Diffusion of Innovation research. Rogers suggests that individuals move through five stages of an 'innovation-decision' process, where they first gain knowledge of the whys, hows, and whats of an innovation and then decide whether to adopt it into their practice.

The characteristics of an innovation play a crucial role in the innovation-decision process. Importantly and perhaps obviously, the innovation needs to allow a tangible improvement over an individual's current practices and meet their felt needs. Termed 'relative advantage', for instructors this might be to reduce workload while enhancing student support and feedback, or to give their teaching team the right data to foster positive relationships with students, or to electronically capture data that was physically recorded or otherwise siloed or unavailable.

Likewise, the innovation also needs to fit with current practices and values so that it is more understandable and applicable. That is, the innovation needs to have high 'compatibility' with instructors' beliefs and pedagogical approaches such as supporting students, promoting self-reflection, monitoring engagement, or fostering positive teacher-student connections. Compatibility can also be with more technical elements such as using spreadsheets or an LMS as part of teaching.

I’d like to posit that the innovation-decision process is central to making learning analytics irresistible to the people who are most deeply involved in designing and delivering education: the instructors themselves.

Case study: two LA systems that address instructors’ and students’ needs

AcaWriter (University of Technology Sydney) and the Student Relationship Engagement System (SRES) (University of Sydney and University of Melbourne) are two learning analytics software packages that have received strong uptake by instructors. They do very different things, but neither is a 'traditional' learning analytics tool in the sense that they don't present dashboards of ‘data exhaust’ or demographics. Instead, they start from the pedagogical needs of instructors and students. Here I'd like to briefly use key components of the 'irresistibility model' (for lack of a better phrase), built upon Rogers' diffusion of innovation model, to analyse this success.

Learning Analytics Platforms:

| Component | AcaWriter (e.g. Shibani, et al. 2020) | SRES (e.g. Arthars & Liu 2020) |
| --- | --- | --- |
| Felt need | Instructors want to improve students' academic writing skills through more timely, accurate feedback on drafts. It is humanly impossible to provide this at scale, and instructors do not always feel confident coaching writing. | Instructors want to support and provide feedback to large cohorts, want to monitor student participation and engagement, and want to build better relationships with students. They want a single place to manage student data, especially at scale, and want to maximise efficiencies without compromising care and connection. |
| Relative advantage over existing practices | Provide feedback on specific features of academic writing at a scale, pace and detail that instructors cannot. Automated feedback reduced student requests for re-marking, saving instructors' time especially for larger cohorts. Students report that this is valuable feedback, and their writing improves. | Flexibly collect and curate data in one place, from students and teaching team (e.g. via online forms, rubrics, mobiles), LMS (e.g. synchronising engagement, submission, interaction data), spreadsheets, etc. Having data in one place saves time, reduces errors. Students can directly provide reflections and other data to instructors. Support messages can be personalised at scale and delivered via email and LMS via LTI. |
| Compatibility with instructor beliefs and values | Early adopter academics were already providing explicit writing instruction, resonated with AcaWriter’s concepts, and were open to new technologies. | Operating via spreadsheets, emails, and LMS pages is familiar. Focus on 'small data' that is of value to instructors in context, derived from the teaching team and students themselves and LMS. |
| Ability for a 'limited trial' (where just one aspect of the software is used) | Instructors can choose how much they adapt current writing activities and assessment rubrics to integrate the tool. | Instructors can choose one function to start with (e.g. attendance capture, email personalisation, student surveys, tailoring feedback, assessment marking) and extend their use as they become more comfortable. |
| Capacity for re-invention (modification of an innovation by users in the process of adoption and implementation) | New discipline-specific variations of the student writing ‘genres’ in AcaWriter are added through educator-researcher design sessions. Templates explain to academics the different levels of customisation possible, from simple to complex. | The software's flexibility allows many different uses which were not originally intended. Instructors share these new technology-supported pedagogical approaches with the community to encourage further innovation. |

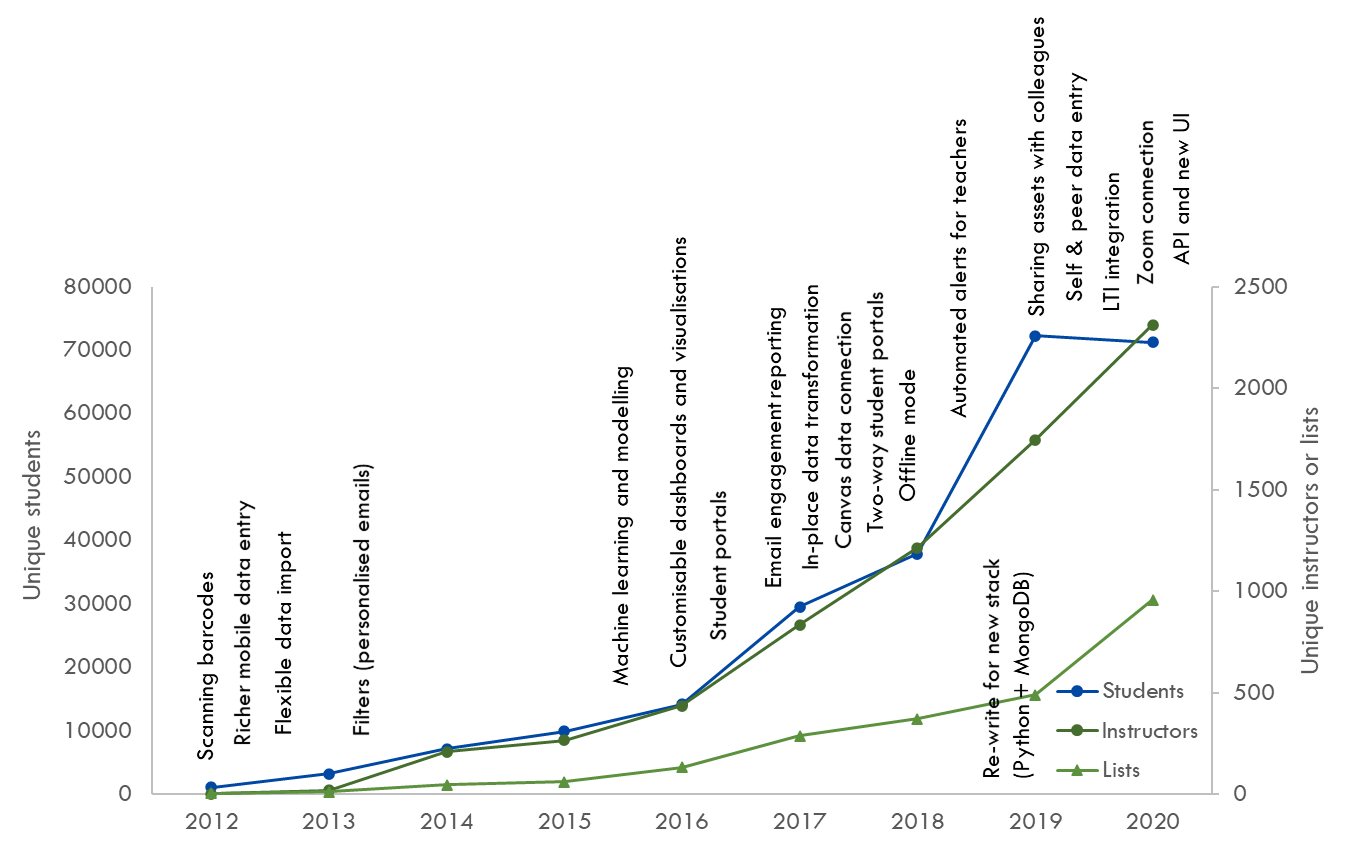

For the rest of this short piece, I’ll focus on the case of SRES (but see the end for more on the adoption dynamics of AcaWriter). The ability for instructors to only use certain functionality to address immediate needs meant that they could perform a 'limited trial' (per Rogers) and then ramp up the complexity of their use once they became more familiar with the software. For example, many instructors start using SRES just for attendance/participation tracking and simple email personalisation, but after a semester or two they start building marking forms and feedback portals to be embedded into the LMS using LTI. From simple origins in 2012 as a mail merge and attendance capture tool, SRES has grown at the University of Sydney to cover almost all of our 70,000+ students, is used by 2,000+ staff, has 55,000+ active monthly users (students and instructors), and is being used at four other Australian institutions under a free licence.

“I think it's the first time that an online system, in particular a University one, aligns so exactly with what I was looking for, and has all the functions I was looking for out of the box.” Unit coordinator, Engineering.

Importantly, part of SRES' growth has been because the software itself has supported diffusibility, and an ecosystem has grown around it to support diffusion. Re-invention has been key to enabling this; Rogers writes that innovations that are more complex, have many applications, and can solve a wide range of problems are more likely to be re-invented. Moreover, a higher degree of re-invention leads to faster and more sustainable adoption of innovations. Re-invention has seen instructors turn SRES into an electronic photo book for teaching assistants to get to know their class better. It has seen SRES be used across a whole faculty to run objective structured clinical examinations and expedite personalised feedback from these. More recently (and strangely), re-invention has also seen SRES being used for university-wide contact tracing during COVID-19 because of its QR code functionality.

Re-invention, a key part of the innovation-decision process, has led to both new software features and new pedagogical approaches springing from (and being shared amongst) SRES’ expanding user base. Far from its simple beginnings as a mail merge and attendance tool, SRES now helps instructors get to know their students better, supports reflection, self-regulation, and teacher-student relationships, scales personalised feedback, assists with cohort management and support, and a whole lot more. The chart below outlines the co-evolution of SRES alongside instructors' needs and demands of data collection, curation, analysis, and use.

How do we design for diffusion?

What are the lessons we might be able to take away from learning analytics software such as SRES and AcaWriter? Allow me to offer two considerations:

- The software itself needs to allow for diffusibility. It needs to address real needs that instructors and students have. It also needs to afford a wide variety of uses so that instructors can start where they need to and branch out as they become more comfortable; it needs to be more than a one-function tool. Where possible, flexibility in the software that allows customisation and re-invention should be encouraged.

- The ecosystem around the software needs to support diffusion. This includes resources (e.g. guides), community (e.g. user groups, colleagues willing to share, developers working with instructors), support (e.g. learning design, training, workshops, troubleshooting), and multi-way communication (e.g. online community spaces, distribution lists).

In part 2 of this series, we’ll take a look at how co-creation, where users play an active role in the use, maintenance, and adaptation of a 'product', augments the innovation-decision process as part of the ‘irresistibility model’ to make learning analytics even more irresistible.

Learn more…

Arthars, N., Dollinger, M., Vigentini, L., Liu, D. Y. T., Kondo, E., & King, D. M. (2019). Empowering Teachers to Personalize Learning Support. In D. Ifenthaler, D. K. Mah, & J. K. Yau (Eds.), Utilizing Learning Analytics to Support Study Success. Springer, Cham. https://doi.org/10.1007/978-3-319-64792-0_13

Dollinger, M., Liu, D., Arthars, N., & Lodge, J. (2019). Working Together in Learning Analytics Towards the Co-Creation of Value. Journal of Learning Analytics, 6(2), 10–26. https://doi.org/10.18608/jla.2019.62.2

Knight, S., Shibani, A., Abel, S., et al. (2020). AcaWriter: A Learning Analytics Tool for Formative Feedback on Academic Writing. Journal of Writing Research, 12(1), 141–186. https://doi.org/10.17239/jowr-2020.12.01.06

Shibani, A., Knight, S., & Buckingham Shum, S. (2020). Educator Perspectives on Learning Analytics in Classroom Practice. The Internet and Higher Education, 46, 100730. https://doi.org/10.1016/j.iheduc.2020.100730