Mind the ‘pedagogical gap’ when preparing staff to use learning analytics

June 15, 2021

Keywords: dashboard design, data literacy, learning analytics, pedagogy, professional development

Target readers: Higher Education Leaders, Learning Analytics Practitioners, Learning Designers, Educators, Researchers

Author: Linda Corrin

Position: Director, Learning Transformations Unit and Associate Professor, Learning Analytics at Swinburne University of Technology (Melbourne, Australia)

Photo by bruce mars on Unsplash


When running workshops on the use of analytics in the University’s learning management system (LMS), I often see educators’ eyes light up as brightly coloured graphs and tables of data appear on the screen. They see these data representations as a gateway to previously inaccessible insights into how their students engage with content and learning activities. Interest in and motivation to use the analytics are high, and they are ready to dive in and learn more.

Yet, as the workshop progresses, it becomes clear that some participants have difficulty interpreting this data in ways that allow them to decide on specific actions to take. But why do some struggle to make sense of this student data?

To take one example, should the educator intervene directly with students the system highlights as struggling, or might a change to the learning design of the course be necessary? Some attribute such dilemmas about how to respond to analytics to a lack of data literacy, a topic increasingly discussed in the learning analytics literature.

But could it also be attributed to a lack of understanding of the pedagogy behind the learning activity? What should we expect the data to show about how students are engaging in a learning activity? Is there data missing that we need to complete the pedagogical picture?

In this post, I’ll explore data literacy, but also highlight an element often overlooked in these discussions: the pedagogical gap.

The need for professional development for learning analytics

From its beginnings, the learning analytics community has acknowledged the need for professional development to prepare staff to use learning analytics. In my own research, my colleagues and I found it to be a key element for successful implementation of learning analytics in universities. However, when scanning the literature on new learning analytics systems or tools, mention of any form of training is often brief, if included at all. That is not to say that training doesn’t exist; it’s just that we don’t talk about it in as much detail as we do the design and use of the tool itself.

At a workshop held by the ASCILITE Learning Analytics Special Interest Group in 2019, participants felt that institutions hadn’t invested sufficient time and resources in providing professional development opportunities for educators. They especially wanted to know how to make use of the analytics capabilities in both LMS and custom-built analytics systems. Participants also wanted insights from the learning analytics community about their approaches to professional learning about learning analytics.

Is it just about data literacy?

No.

Data literacy is important – it enables the educator to read the data or visualisation. However, for them to be able to choose the right course of action, other knowledge and skills are required.

For example, Gray and colleagues recently put together a Framework for continuous professional development in learning analytics. In this framework, they highlight the skills educators need to (1) know what they can see, (2) use and practise with the data to gain insight into students’ activity, and (3) take action. To do these three things, educators need not only to be data literate but also to have knowledge and skills related to ethics and privacy, generating feedback, and reflection.

A strong pedagogical foundation

However, many discussions and frameworks on professional development for learning analytics are based on the assumption that educators already have a strong understanding of pedagogical design.

In my work with educators, I have noticed that this foundational pedagogical knowledge is not always there. This makes conversations about the link between the analytics and the pedagogy difficult.

Student learning and learning design are complex concepts which require careful study. However, many universities still don’t require staff to complete educational qualifications before they start teaching.

An understanding of foundational pedagogical concepts and core theories related to student learning can help all stakeholders who make decisions based on learning analytics data. This includes not only educators, but also learning designers, learning analytics developers, and administrators. This awareness helps them identify the different ways the data can be interpreted, and what additional information may be needed to make sense of the data in certain contexts.

What can we do to address the gap?

For educators to be able to make good use of learning analytics we need to ensure that they can make the right connections between what they interpret from the data and actions that can improve learning. Aligning and integrating data literacy with existing pedagogical training is one way to address this pedagogical gap.

Rather than data literacy being addressed as something separate, integrating it into existing learning and teaching training can help educators better understand the link between their pedagogical design and the patterns of data that result.

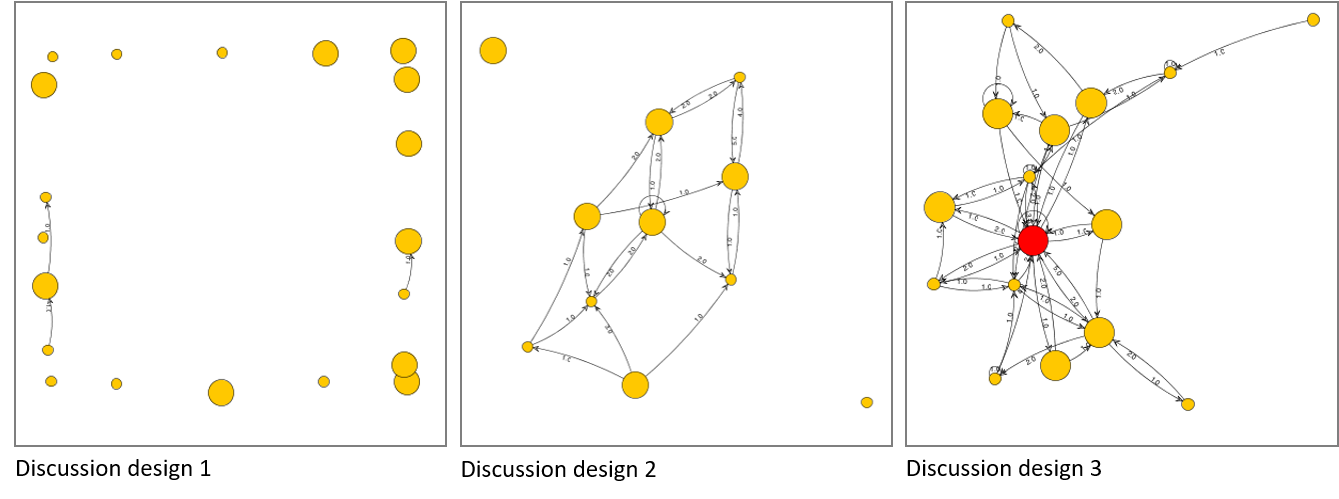

For example, at Swinburne University of Technology, we incorporate data and visualisations into our professional learning sessions to help introduce pedagogical concepts. In our workshop on designing and facilitating online discussions, we start by showing participants different patterns of actual student interaction across a range of discussion forum designs (using social network diagrams such as the one shown above). With reference to what they observe in these diagrams, we then discuss the underlying principles and the impact that design decisions can have on how students engage with the learning activity.

Exposure to the data visualisations, combined with in-depth, guided discussion of what they mean and how they relate to the learning design, helps to bridge the pedagogical gap. It also prepares educators to work with learning analytics data in their own context.

I also encourage institutions to view these approaches as part of a continuous professional learning plan. Learning analytics, and the field of education more broadly, are constantly evolving – so approaches to professional learning need to reflect that evolution. Building and maintaining communication and collaboration with educators around data literacy and pedagogy is critical to enabling new and innovative learning analytics initiatives to be implemented, shared, and improved over time.